- Thorndike's Law of Effect is the first formal statement of the principle of reinforcement.

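- Notice that it is not really a behavioral definition: it appeals to the animal's "satisfaction" and "discomfort," which cannot be directly observed.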

- His major contributions, however, were his laws of learning, the most important of which was his law of effect (Thorndike, 1911, p. 244):

- "Of several responses made to the same situation, those which are accompanied or closely followed by satisfaction to the animal will, other things being equal, be more firmly connected with the situation, so that, when it recurs, they will be more likely to recur; those which are accompanied or closely followed by discomfort to the animal will, other things being equal, have their connections with that situation weakened, so that, when it recurs, they will be less likely to occur. The greater the satisfaction or discomfort, the greater the strengthening or weakening of the bond."

- The formula for an operant is:

- SD-->R-->SR

- SD is a DISCRIMINATIVE STIMULUS

- It "sets the occasion," informing the organism that a response will be followed by an SR, or reinforcer

- SR is the reinforcer (see more info below)

- R is the response

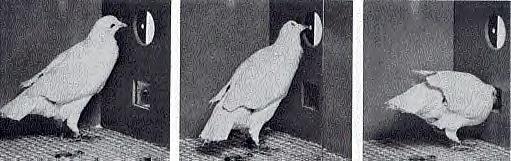

Pigeon operant chamber (operant chambers are also called "Skinner boxes")

- In operant conditioning, a reinforced response is repeated (e.g., a hungry rat presses a lever and food follows; eventually it presses the lever over and over until it is no longer hungry)

- The paradigm for operant conditioning is:

- SD-->R-->SR

- where SD is a discriminative stimulus, R is a response, and SR is a reinforcer.

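- In operant conditioning, an animal must first make a response. Notice that this is not the case in classical conditioning, where the unconditioned stimulus follows the conditioned stimulus regardless of what the animal does.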

- That response is usually preceded by a discriminative stimulus, and sometimes followed by a reinforcer.

- Operant conditioning occurs when the association of response and reinforcer causes the animal to make the response again later in a similar situation.

- The discriminative stimulus signals the animal that a response at a given time is likely to be reinforced.

- The response MUST be made, for without it, the reinforcement will not occur.

- Finally, the reinforcer has the property of making the response that precedes it more likely to recur.

- Example: Think of a traffic light.

- It's a complex discriminative stimulus.

- Green means go.

- Red means stop.

- Example: Imagine that you are in a future classroom and a green light appears on your console.

- Were you to raise your hand and ask a question, you would get a $20 bill.

- If you never raise your hand then no money.

- But, if you do raise your hand and get the money you'll probably raise your hand again.

- But when you raise your hand while the green light is off, nothing happens: no $20 bill.

- Soon, the green light comes on, you raise your hand and the money is dispensed.

- Now, that green light has become a discriminative stimulus.

- If it is on AND you raise your hand you'll get the money.

- If it is off and you raise your hand nothing happens.

- What do you think you'll do when that green light appears?
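The green-light contingency above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions: the function names, the 50/50 chance that the light is on, and the learning rule (a simple linear-operator update that nudges the probability of raising your hand toward 1 after a reinforced response and toward 0 after an unreinforced one) are all hypothetical, not a model taken from these notes.

```python
import random


def consequence(light_on: bool, raised_hand: bool) -> int:
    """Dollars delivered on one trial: SD (green light) + R (raised hand) -> SR ($20)."""
    return 20 if (light_on and raised_hand) else 0


def simulate(trials: int = 2000, alpha: float = 0.1, seed: int = 1) -> dict[bool, float]:
    """Toy discrimination learning (hypothetical update rule, not from the notes).

    p[True] / p[False] is the probability of raising a hand when the light is on / off.
    """
    rng = random.Random(seed)
    p = {True: 0.5, False: 0.5}  # no discrimination at the start
    for _ in range(trials):
        light_on = rng.random() < 0.5      # assumption: light is on half the time
        if rng.random() < p[light_on]:     # the response is emitted
            reinforced = consequence(light_on, True) > 0
            target = 1.0 if reinforced else 0.0
            # Reinforced responses become more likely; unreinforced ones less likely.
            p[light_on] += alpha * (target - p[light_on])
    return p


p = simulate()
print(f"P(raise hand | light on)  = {p[True]:.2f}")
print(f"P(raise hand | light off) = {p[False]:.2f}")
```

Under these assumptions the probability of raising your hand climbs toward 1 when the light is on and falls toward 0 when it is off, which is the discrimination described in the example: the light has become an SD.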

- The apparatus used to study operant conditioning removes many distractors.

- An animal in an operant chamber will eventually direct its attention to the manipulandum (e.g., the lever or key) that activates the reinforcer.

- So, operant conditioning can be defined as a procedure in which a response that is followed by a stimulus recurs.

- It recurs because of the stimulus.

- That stimulus is called a reinforcer.

- Reinforcers are stimuli that make the response that preceded them more likely to recur.

- Typical reinforcers include the giving of food or drink, and the removal of shock or pain.

- Other properties of reinforcers will be covered below.

- Discriminative stimuli set the occasion for a response and its associated reinforcer to occur.

- So again, a green traffic light is the discriminative stimulus for the response of pressing on the accelerator of your car.

- But the facial expression of your boss may set the occasion for you NOT to ask your boss for a raise (because of a likely negative answer to your question).

- Consequences can either increase or decrease a response:

- Reinforcement increases the response

- In positive reinforcement, a stimulus is delivered after the response and the response continues

- e.g., you raise your hand in class and $20 comes out of your desk. What do you do? (Raise your hand again.)

- In negative reinforcement, a stimulus is taken away after the response and the response continues

- Good examples: raise umbrella when it's raining (you are no longer wet);

- Take out trash when spouse nags you (nagging stops)

- Positive reinforcement is when a response is followed by the addition of a stimulus, and then that response is more likely to recur.

- Negative reinforcement, on the other hand, is when a response is followed by the removal of a stimulus and then that response is more likely to recur.

- Notice that negative reinforcement also makes the response more likely to recur.

- Let's revisit that hypothetical classroom in the near future.

- Now, when you sit at your desk, you are subjected to electric shock. Whenever you are at your desk, you are being shocked.

- One day you ask a question, and the shock disappears, briefly.

- You ask another question, and it disappears briefly again.

- Soon, you are asking a lot of questions.

- Your question asking is also being reinforced, but now by the removal of a stimulus, or by negative reinforcement.

- Discriminative Stimuli

- SD: a stimulus signaling an opportunity for reinforcement (e.g., a green light for a pigeon)

- "Want a back rub?"

- SΔ ("S-delta"): a stimulus signaling that there is no opportunity for reinforcement (e.g., a frown)

- "I have such a headache" (SΔ is used to indicate that reinforcement will not follow.)

- Discriminative stimuli are the first step in a reinforcement situation

- SD -> R -> SR

- Or, discriminative stimulus, followed by response, followed by reinforcement

- Example: Red or Green traffic lights (another view of this by famous comic)

- Shaping

- Shaping is reinforcing successive approximations of a final desired response.

- In the video below, notice how Skinner gradually gets the pigeon to execute a full turn (the final desired response) by first reinforcing left turns and later reinforcing only half turns.

- The light tells the pigeon that reinforcement has been delivered.

- Notice how quickly the pigeon makes the full turn.

- Skinner could have just waited a long time for the pigeon to make a full turn and then reinforced it, but shaping makes that wait unnecessary.

- Shaping, then, is a technique for getting to a final desired response more quickly.

- See Skinner shaping a pigeon in class (below)

- He rewards the pigeon with food for making more and more of a circle by turning to the left

- backing professor into a corner (not on test, but humorous)
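Shaping can be sketched as a loop that reinforces any turn meeting the current criterion and raises the criterion once that approximation has become typical. Every number here is a hypothetical assumption chosen for illustration: the pigeon's starting tendency (10 degrees), its trial-to-trial variability (±30 degrees, cycled deterministically so the result is reproducible), the 20-degree steps, and the rule that a reinforced turn pulls the pigeon's typical turn halfway toward itself.

```python
from typing import Optional

# Hypothetical model: on each trial the pigeon turns its "typical" amount
# plus a fixed, repeating amount of variability (degrees to the left).
OFFSETS = (-30.0, -15.0, 0.0, 15.0, 30.0)


def shape(target: float = 360.0, step: float = 20.0, rate: float = 0.5,
          start: float = 10.0, max_trials: int = 10_000) -> Optional[int]:
    """Return the trial on which a full turn becomes typical, or None if it never does."""
    typical = start                  # how far the pigeon tends to turn right now
    criterion = min(step, target)    # first approximation: any small left turn
    for trial in range(max_trials):
        turn = typical + OFFSETS[trial % len(OFFSETS)]
        if turn >= criterion:        # approximation met -> reinforce (food)
            # Reinforcement makes the just-emitted turn more typical.
            typical += rate * (turn - typical)
            if typical >= criterion:
                if criterion >= target:
                    return trial + 1  # a full turn is now the typical response
                # Demand a closer approximation to the final response.
                criterion = min(target, criterion + step)
    return None


print("with shaping:", shape())
print("waiting for a full turn:", shape(step=360.0))  # None in this toy model
```

In this toy model a full turn is never emitted without shaping, because the simulated pigeon never turns more than 40 degrees on its own; a real pigeon varies more, so waiting would merely take a long time, as the notes say.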

- Primary and Secondary Reinforcement

- Primary: biologically relevant reinforcers such as food, water, pleasure, and pain (there are only a few primary reinforcers, and they are essentially the same as UCSs, or unconditioned stimuli)

- Secondary: secondary reinforcers come to act as reinforcers when they are associated with primary reinforcers

- Consider the following sequence in dog training: "sit" (dog eventually sits)->"good dog" (give a treat, repeat)

- Eventually the dog will treat the words "good dog" as a reinforcer, a secondary reinforcer

- Most human behavior is reinforced by secondary reinforcers

- You, for example, will do things to increase your number of points in this class

- I'd bet many of you would wash my car for 10 points added to your score, right? (Too bad that's unethical)

- Money, by definition, is a secondary reinforcer (you can't eat it or drink it)

- Praise is secondary reinforcement too

- More on Primary and Secondary Reinforcement

- Primary: fulfills a biological need: food, drink, pleasure, pain (avoid it), disgust (not many primary reinforcers exist)

- Secondary: associated with primary (usually via classical conditioning) and they ALSO act as reinforcers:

- e.g., grades, money, praise (a large number of secondary reinforcers exist)

- Primary reinforcers are biological.

- Food, drink, and pleasure are the principal examples of primary reinforcers.

- But most human reinforcers are secondary, or conditioned.

- Examples include money, grades in schools, and tokens.

- Secondary reinforcers acquire their power via a history of association with primary reinforcers or other secondary reinforcers.

- For example, if I told you that dollars were no longer going to be used as money, then dollars would lose their power as a secondary reinforcer.

- Another example would be in a token economy.

- Many therapeutic settings use the concept of the token economy.

- Remember, a token is just an object that symbolizes some other thing.

- For example, poker chips are tokens for money.

- Back in the day in New York City, subway tokens used to be pieces of metal that could be inserted into the turnstiles of the subway.

- Old NYC subway token, at one point worth $1.25, so four tokens = $5.00, but only in NYC

- Small debts were often paid off using tokens in New York because of the token's value of one subway ride.

- However, attempting to pay off debts elsewhere using NYC subway tokens would not be acceptable.

- In a token economy, people earn tokens for making certain responses; then those tokens can be cashed in for privileges, food, or drinks.

- For example, residents of an adolescent halfway house may earn tokens by making their beds, being on time to meals, not fighting, and so on.

- Then, being able to go to the movies on the weekend may require a certain number of tokens.
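The bookkeeping of a token economy like the halfway-house example can be sketched as a small ledger. The behaviors, token values, and the 10-token price of the weekend movie are hypothetical numbers chosen for illustration; a real program would set its own.

```python
from dataclasses import dataclass, field

# Hypothetical earning rules and prices (not taken from any real program).
EARN = {"made bed": 2, "on time to meal": 1, "no fighting today": 3}
PRICES = {"weekend movie": 10}


@dataclass
class TokenAccount:
    balance: int = 0
    log: list = field(default_factory=list)

    def earn(self, behavior: str) -> None:
        """Deliver tokens (secondary reinforcers) right after a target response."""
        tokens = EARN[behavior]
        self.balance += tokens
        self.log.append(f"+{tokens} {behavior}")

    def cash_in(self, privilege: str) -> bool:
        """Exchange tokens for a privilege; refuse if the balance is too low."""
        price = PRICES[privilege]
        if self.balance < price:
            return False
        self.balance -= price
        self.log.append(f"-{price} {privilege}")
        return True


resident = TokenAccount()
resident.earn("made bed")
resident.earn("made bed")
resident.earn("no fighting today")
print(resident.balance, resident.cash_in("weekend movie"))  # 7 False
resident.earn("made bed")
resident.earn("on time to meal")
print(resident.balance, resident.cash_in("weekend movie"))  # 10 True
```

The tokens have no value of their own in this sketch; their only power comes from what `cash_in` lets a resident buy, which mirrors the point above that a secondary reinforcer depends on its link to other reinforcers.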

- Punishment decreases the response

- NOTE: Punishment is NOT the exact opposite of reinforcement, because punishment arouses negative emotions while reinforcement does not similarly arouse positive emotions

- In positive punishment, a stimulus is delivered after the response and the response stops

- e.g., you raise your hand in that future classroom and you get a shock. What do you do? (Not raise your hand again.)

- Negative punishment is when something is taken away and the response stops

- e.g., you raise your hand in class and points toward your grade are taken away (like a fine). What do you do? (Not raise your hand again.)

- Good examples: a child smarts off and loses their phone; a defensive football player delivers an illegal hit and is sent out of the game. (What does the player do in subsequent games?)

- Punishment is when a stimulus that follows a response leads to a lower likelihood of that response's recurring.

- Note that reinforcement, either positive or negative, leads to a higher likelihood of a response recurring.

- Positive punishment is when a response is followed by the addition of a stimulus, and that leads to that response's occurring less often.

- Note that this scene sometimes occurs in real classrooms.

- If a student asks a question and then hears something like "That's the stupidest question I ever heard" from the instructor, that student will likely not ask many questions in the future.

- Negative punishment is when a response is followed by the removal of an already present stimulus, and that leads to that response's occurring less often.

- For example, in that future classroom again, if you had to return one of your $20 bills every time you asked a question, you would probably quit asking questions.
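The four terms above come from two yes/no questions: was a stimulus added or removed after the response, and did the response become more or less likely afterward? A minimal Python sketch of that two-by-two classification follows; the names `Stimulus` and `classify` are hypothetical, and the examples are the classroom scenarios from these notes.

```python
from enum import Enum


class Stimulus(Enum):
    ADDED = "added"      # something is delivered after the response
    REMOVED = "removed"  # something already present is taken away


def classify(stimulus: Stimulus, response_more_likely: bool) -> str:
    """Name a consequence by what happened to the stimulus and to the response."""
    sign = "positive" if stimulus is Stimulus.ADDED else "negative"
    effect = "reinforcement" if response_more_likely else "punishment"
    return f"{sign} {effect}"


examples = {
    "$20 appears after raising hand": (Stimulus.ADDED, True),
    "shock stops after asking a question": (Stimulus.REMOVED, True),
    "shock after raising hand": (Stimulus.ADDED, False),
    "must return a $20 bill after asking": (Stimulus.REMOVED, False),
}
for description, (stimulus, more_likely) in examples.items():
    print(f"{description}: {classify(stimulus, more_likely)}")
```

"Positive" and "negative" refer only to adding or removing a stimulus, not to whether the consequence is pleasant; the change in the response's likelihood is what decides reinforcement versus punishment.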

- Although it might appear that reinforcement and punishment are opposites, they are not.

- Punishment arouses negative emotions (e.g., hate, disgust, loathing).

- But, reinforcement does not similarly arouse positive emotions like love, liking, and attraction.

- Think of jilted suitors who ask why their partners left.

- They might wonder why their partners left even though they gave their partners expensive gifts.

- Those expensive gifts did not, in and of themselves, lead to love.

- Skinner (1953) offered three reasons why punishments should not be administered:

- they only work temporarily,

- they create conditioned stimuli that lead to negative emotional reactions,

- and they reinforce escape from the conditioned situation in the future.

- He wrote (pp. 192–193):

- Civilized man has made some progress in turning from punishment to alternative forms of control . . . But we are still a long way from exploiting the alternatives, and we are not likely to make any real advance so long as our information about punishment and the alternatives to punishment remains at the level of casual observation. As a consistent picture of the extremely complex consequences of punishment emerges from analytical research, we may gain the confidence and skill needed to design alternative procedures in the clinic, in education, in industry, in politics, and in other practical fields.

- Schedules of Reinforcement

- Skinner analyzed the effect of how reinforcers are delivered

- He found that the scheduling of reinforcement made a big difference

- There are many reinforcement schedules but the main ones are:

- Fixed Interval

- get a reinforcer for the first response made after a fixed period of time (e.g., 10 seconds, one day, one week) has elapsed

- paycheck is an example

- Variable Interval

- get a reinforcer for the first response made after a variable, unpredictable period of time has elapsed

- example: working for a shady character who pays you on an irregular basis

- Fixed Ratio

- get a reinforcer after a fixed number of responses (e.g., 10, 50, 200)

- piecework is an example (e.g., sewing garments, get paid for each one made)

- Variable Ratio

- get a reinforcer after a variable and random number of responses

- gambling is good example, especially slot machines

- Examples (figure omitted)

- The steeper the curve, the higher the rate of responding

- One of the major discoveries of operant conditioning was that not only do reinforcers have the power to cause responses to be made more often, but that how and when those reinforcers are delivered also affects the pattern of responses.

- The rule that controls how and when reinforcement is delivered is called a reinforcement schedule.

- Schedules are of two main types, time-based and response-based.

- Time-based schedules usually contain the word interval, as in time interval.

- Response-based schedules usually contain the word ratio, referring to the ratio of responses to reinforcers.

- Fixed interval (FI) schedules reinforce the first response made after a given interval has elapsed, measured from the preceding reinforcement.

- The length of the interval is indicated by a number added to the letters FI (usually in seconds).

- Thus, in FI 15 the first response which occurs fifteen seconds or more after the preceding reinforcement is reinforced.

- Variable interval (VI) schedules reinforce the first response made after a variable amount of time has elapsed.

- A VI 20 would reinforce after an average of 20 seconds, not every 20 seconds.

- Fixed ratio (FR) schedules deliver a reinforcer based upon a constant number of responses.

- For example, an FR-10 schedule would deliver a reinforcer after every 10th response.

- Variable ratio (VR) schedules are similar to fixed ratio, except that the number of responses required for a reinforcer changes each time.

- So, a VR-15 schedule would deliver a reinforcer after an average of 15 responses, not on every 15th response.
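The four schedule rules can be made concrete with a small Python sketch. Each class answers one question, "is this response reinforced?", given the time of the response. The class names and the way variable requirements are drawn (uniformly, so that they average the stated value) are assumptions for illustration; the notes only say that the requirement varies around an average. The `reinforcers` helper responds at a steady rate, which is a simplification: real animals change their rate of responding depending on the schedule.

```python
import random


class FixedRatio:
    """FR n: reinforce every n-th response."""
    def __init__(self, n: int) -> None:
        self.n, self.count = n, 0

    def respond(self, t: float) -> bool:
        self.count += 1
        if self.count >= self.n:
            self.count = 0
            return True
        return False


class VariableRatio:
    """VR n: reinforce after a varying number of responses that averages n."""
    def __init__(self, n: int, rng: random.Random) -> None:
        self.n, self.rng, self.count = n, rng, 0
        self.required = self._draw()

    def _draw(self) -> int:
        # Assumption: requirements uniform on 1..2n-1, which has mean n.
        return self.rng.randint(1, 2 * self.n - 1)

    def respond(self, t: float) -> bool:
        self.count += 1
        if self.count >= self.required:
            self.count, self.required = 0, self._draw()
            return True
        return False


class FixedInterval:
    """FI s: reinforce the first response at least s seconds after the last reinforcer."""
    def __init__(self, s: float) -> None:
        self.s, self.last = s, 0.0

    def respond(self, t: float) -> bool:
        if t - self.last >= self.s:
            self.last = t
            return True
        return False


class VariableInterval:
    """VI s: like FI, but the required wait varies around a mean of s seconds."""
    def __init__(self, s: float, rng: random.Random) -> None:
        self.s, self.rng, self.last = s, rng, 0.0
        self.wait = self._draw()

    def _draw(self) -> float:
        # Assumption: waits uniform on [0, 2s], which has mean s.
        return self.rng.uniform(0.0, 2 * self.s)

    def respond(self, t: float) -> bool:
        if t - self.last >= self.wait:
            self.last, self.wait = t, self._draw()
            return True
        return False


def reinforcers(schedule, rate: float, duration: float = 600.0) -> int:
    """Count reinforcers earned by responding steadily at `rate` responses per second."""
    n_responses = int(rate * duration)
    return sum(schedule.respond(k / rate) for k in range(1, n_responses + 1))


rng = random.Random(0)
for rate in (1.0, 2.0):
    print(f"{rate} responses/s:",
          "FR-10", reinforcers(FixedRatio(10), rate),
          "FI 15", reinforcers(FixedInterval(15), rate),
          "VR-15", reinforcers(VariableRatio(15, rng), rate),
          "VI 20", reinforcers(VariableInterval(20, rng), rate))
```

Doubling the response rate doubles the reinforcers earned on FR-10 (60 to 120 over ten simulated minutes) but leaves FI 15 unchanged at 40, one way to see why workers typically work harder on ratio schedules (piecework) than on interval schedules (a paycheck).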

- Let's examine some everyday examples of reinforcement schedules and their effects.

- A paycheck is a good example of an FI schedule. Workers get a check once a week, for example, if they show up and work. They do not get rewarded for working harder, or penalized for working less.

- Workers who work by the piece or by the job, piecework, are paid more if they produce more, and are paid less if they produce less.

- Piecework is an example of an FR schedule.

- Workers typically work harder on FR schedules than they do on FI schedules.

- Gambling is the classic example of a VR schedule.

- Part of the allure of gambling is its uncertain payoff.

- Imagine a slot machine that paid off every 10th time; only the 10th pull would be exciting.

- A real slot machine, on the other hand, pays off on a random basis, so each pull is exciting.

- VR schedules maintain behavior at very high rates.

- Gambling is also addictive; see below.

- About the best everyday example of a VI schedule that I can think of is working for a shady character.

- This person pays you, but you never know when payday is going to be.

- It could be a week, two weeks, a month.

- So, you don't work very hard.

- You would probably jump to another job if the pay were the same but given regularly.

- B.F. Skinner

- Skinner's contribution was radical behaviorism, or more properly, behavior analysis.

- Behavior analysis sidesteps issues of mind by assuming environmental determinism and by including the internal environment (self-talk, covert verbal behavior) as part of the environment.

- Thus, dualistic issues are resolved and each human becomes subject to a unique set of environmental determinants composed of both external and internal environments.

- Skinner revived Bacon's inductive method, along with its avoidance of theory.

- Operants, the behaviors emitted by organisms, are selected by the environment in a quasi-evolutionary way.

- Respondents, behaviors elicited by observable stimuli, were Skinner's term for the behaviors studied in Pavlovian, or classical, conditioning.

- Skinner explored the ramifications of operant conditioning both in the lab and in the field.

- Schedules of reinforcement, programmed instruction, and behavior modification were three of his most important contributions.

- Comments

- Skinner expanded on the work of Pavlov and Watson by redefining the human organism's environment to include the things people say to themselves.

- The same rules of conditioning that apply to the external environment also apply to that internal environment.

- Skinner created a logical and self-consistent system that continues to have a small but vocal minority of adherents today.

- Operant Conditioning (Video)

- Shows aspects of operant conditioning